jQuery's $.ajax defaults to asynchronous requests (async: true), and as of jQuery 1.8, using async: false with the jqXHR/Deferred interface is deprecated. In other words, you shouldn't block loading the page on external calls.
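Here is a minimal sketch of what "asynchronous" buys you. It doesn't use jQuery: `fakeRequest` is a hypothetical stand-in for `$.ajax`, built on a Promise and `setTimeout`, and `/api/items` is a made-up URL. The only point is that the call returns immediately and the code after it keeps running while the response is pending.

```javascript
// Hypothetical stand-in for an async call such as $.ajax:
// it returns a Promise right away and resolves it after delayMs.
function fakeRequest(url, delayMs) {
  return new Promise((resolve) => {
    setTimeout(() => resolve(`response from ${url}`), delayMs);
  });
}

const order = [];

// Fire the request; this callback runs only once the "response" arrives.
const pending = fakeRequest("/api/items", 50).then((body) => {
  order.push(body);
});

// This line runs immediately, before the response, so nothing is blocked.
order.push("page keeps rendering");

pending.then(() => console.log(order));
// logs [ 'page keeps rendering', 'response from /api/items' ]
```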

Likewise, Rails 4 is thread-safe by default, meaning you should be able to run a threaded server and handle multiple connections at once without exploding.

However, this doesn't mean you can pull things to your heart's content.

I have a little application that calls its own RESTful API zero or more times per page load to get the information it needs. However, successive calls are blocking one another. I thought the issue was either the threading or the async options mentioned above, but it's not. It's a little thing called HTTP pipelining (or rather, the lack of it) that keeps my calls from overlapping, so total load time grows linearly with the number of calls.
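A rough way to see why this hurts: if requests can't overlap, each one waits for the previous one to finish, and total time is the sum of the individual durations rather than the longest one. The sketch below is a toy model, not how any particular browser actually schedules requests; `scheduleRequests` and the 100 ms durations are made up for illustration. It assumes each connection handles one request at a time (no pipelining) and that each request goes to whichever connection frees up first.

```javascript
// Toy model (hypothetical): each connection serves one request at a time,
// i.e. HTTP/1.1 without pipelining. Returns each request's finish time in ms.
function scheduleRequests(durations, maxConnections) {
  // freeAt[i] = time (ms) at which connection i becomes idle
  const freeAt = new Array(maxConnections).fill(0);
  return durations.map((duration) => {
    // Pick the connection that becomes idle earliest.
    let best = 0;
    for (let i = 1; i < freeAt.length; i++) {
      if (freeAt[i] < freeAt[best]) best = i;
    }
    // The request starts when that connection is free and occupies it.
    freeAt[best] += duration;
    return freeAt[best];
  });
}

const calls = [100, 100, 100, 100];
console.log(Math.max(...scheduleRequests(calls, 1))); // 400: fully serialized
console.log(Math.max(...scheduleRequests(calls, 4))); // 100: all four overlap
```

With a single connection the four calls finish at 100, 200, 300 and 400 ms; with four connections they all finish at 100 ms.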

You should be able to enable SPDY in chrome://flags, which apparently fixes this problem. But SPDY is a Google technology, isn't cross-browser (yet), and isn't really a fix for a website issue anyway: you can barely get people to stop using IE7, let alone change risky browser settings.

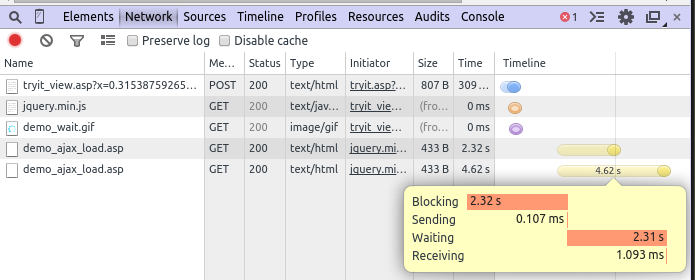

You can see what I mean on w3schools. Make the following change to the default code of their AJAX complete test:

```javascript
$("button").click(function(){
  $("#txt").load("demo_ajax_load.asp");
  $("#txt2").load("demo_ajax_load.asp"); // the added line
});
```

...

Then duplicate the div to create the #txt2 element.

Open the developer tools (F12 in Chrome), switch to the Network tab, and press your new button.

Because both requests go to the same URL, the browser doesn't run them in parallel: the second one waits for the first to finish. That's a big problem for a system that relies on being asynchronous.

HTTP 2.0 should fix this, since it multiplexes many requests over a single connection. But given that we're still on HTTP 1.1 and HTML5 is getting all the attention right now, I'm not sure of the timeline.